Gamer’s Revanche

September 3rd, 2014 | Published in Art and Literature, Feminism, Games, Political Economy, Politics

There was a time when I might have called myself a "gamer." That is, I'm someone who plays and thinks about video games, and views them as a rich cultural form full of potential, both as art and as sport.

Now, however, even people who usually ignore games have been introduced to the figure of the "gamer," and he is something entirely different. The gamer is threatened by women who share his tastes, and calls them ["fake geek girls"](http://www.themarysue.com/on-the-fake-geek-girl/). The gamer reacts to Anita Sarkeesian's [criticism](https://www.youtube.com/watch?v=5i_RPr9DwMA) of sexist tropes in video games with a [bombardment](http://www.theverge.com/2014/8/27/6075179/anita-sarkeesian-says-she-was-driven-out-of-house-by-threats) of violent threats against her and her family. The gamer attacks feminist game creator Zoe Quinn with misogynist abuse and baseless allegations of corruption in reaction to a nasty blog post by a [bitter ex-boyfriend](http://www.theverge.com/2014/8/27/6075179/anita-sarkeesian-says-she-was-driven-out-of-house-by-threats).

It is not news that video games are often hostile to women, both as an industry and as a fan culture. Nor is it new that there are excellent feminist critics pointing this out within the games press, like [Leigh Alexander](http://www.gamasutra.com/view/news/224400/Gamers_dont_have_to_be_your_audience_Gamers_are_over.php) and [Samantha Allen](http://www.thedailybeast.com/articles/2014/08/28/will-the-internet-ever-be-safe-for-women.html). But the latest debates over misogyny and games have boiled over with new intensity in discussions among game consumers and creators, and have also reached beyond these circles. The New Inquiry has rounded up a collection of [links](http://thenewinquiry.com/features/tni-syllabus-gaming-and-feminism/) for those who need to get up to date.

Evidently not everyone with a deep interest in games is a bitter, reactionary young man who reacts with violent misogyny to even the hint of social justice. But that faction of "gamers" has demonstrated its outsize ability to police the boundaries of debate and to drive out consumers, creators, and critics who challenge it, with the consent of a silent majority. What, politically, does this specific right-wing demographic represent?

The culture of video games has long been a fairly insular one---as has, to a greater or lesser extent, the wider "geek culture" in which it has been embedded, encompassing phenomena like Dungeons and Dragons, science fiction and fantasy novels and movies, and comic books. All of these forms have long histories of politically subversive, socialist, and feminist experimentation. But in their best-funded and most widely consumed commercial forms, they have especially catered to certain kinds of socially awkward boys and men, providing them with alternatives to dominant standards of masculinity.

At the same time, however, they cultivated an alternative misogyny, based on resentment of other men and a desire to usurp their patriarchal dominance, rather than overturn patriarchy entirely. Hence the geek culture is a [breeding ground](http://www.thedailybeast.com/articles/2014/05/27/your-princess-is-in-another-castle-misogyny-entitlement-and-nerds.html) for [Nice Guys](http://geekfeminism.wikia.com/wiki/Nice_guy_syndrome) who see themselves as persecuted outcasts but are unable to get over their desire to control women.

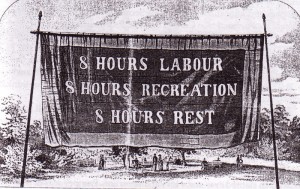

It's impossible to dispute anymore that gaming is a completely mainstream mass-culture phenomenon in purely economic terms: consumer spending on games now [rivals or exceeds](file:///home/pefrase/Downloads/Global_Media_Report_2013.pdf) spending on music and movies. And yet these gamers cling to an identity as marginalized underdogs, even as they defend the game industry's existing practices of sexism, racism, and class exploitation.

Part of this has to do with the lag between economic and cultural acceptance. Games may be mainstream as an industry, but they have not yet achieved cultural parity with other media and other art forms. So we still get great film critics writing bumbling [rants](http://www.rogerebert.com/rogers-journal/video-games-can-never-be-art) about why video games can't be art, and the *New York Times* expressing wonderment at the notion that competitive sports can be [mediated](http://www.nytimes.com/2014/08/31/technology/esports-explosion-brings-opportunity-riches-for-video-gamers.html) by computers.

This is not unusual for any young medium; cinema and television faced similar lags. Eventually, people who grew up with games will be in positions of cultural authority, and the idea of games as an inferior or ephemeral medium will disappear.

The assimilation of games into the larger culture poses a problem for a reactionary segment of gamers, however. It means engaging with a society that, while it is still capitalist and patriarchal, still suffused with racism, has also been challenged for decades by those it has traditionally marginalized. Wider engagement inevitably [changes](http://www.vice.com/read/dungeons-and-dragons-has-caught-up-with-third-wave-feminism-827) the parameters of geek culture, as new voices and new ideas are incorporated. Some gamers would like it both ways: they want everyone to take their medium seriously, but they don't want anyone to challenge their political assumptions or call into question the way games treat people who don't look and think like them. They hate and fear a world where games are truly made by and for everyone; where women make up a [majority](http://www.dailydot.com/geek/adult-women-largest-gaming-demographic/) of the gaming audience; where a [trans woman](http://wiki.teamliquid.net/starcraft2/Scarlett) dominates one of the world's great eSports.

It's important to call these people what they are: not just anti-social jerks and not only misogynists, but, as Liz Ryerson [says](http://ellaguro.blogspot.com/2014/08/on-right-wing-videogame-extremism.html), overall the *right wing* of people involved in games. No surprise, then, that they resemble conservatives who resentfully bemoan the liberal bias of Hollywood or the condescension of elite college professors. This isn't a problem with gamer culture. It's a problem with our entire culture, and specifically with the attitudes and behavior of a rightist, predominantly white and male section of that culture.

Right-wing gamers project an overweening sense of superiority and entitlement, while at the same time constructing an identity based on marginality and victimization. In this, though, they aren't really that different from many revanchist movements in capitalist societies. They're much like the Tea Party right, which laments the disappearance of the America it recognizes---that is, the America where straight white men are systematically advantaged. This is a basic element of the [reactionary mind](http://coreyrobin.com/new-book/): a fundamental opposition to equality as such. So it is with gamers for whom, as Tim Colwill [puts it](http://games.on.net/2014/08/readers-threatened-by-equality-not-welcome/), "the worst possible thing that can happen here is equality." This group of angry gamers no longer "recognizes their country," as it were, what with all these women and queers and leftists running around.

This is why it's wrong to suggest, as [Ian Williams](https://www.jacobinmag.com/2014/09/death-to-the-gamer/) does, that gamer culture's fatal flaw is to be "tainted, root and branch, by its embrace of consumption as a way of life." The idea that communities organized around shared cultural consumption are inherently reactionary is so broad as to be vacuous, and it could apply equally to movie buffs, sports fans, or Marxist theory aficionados. It's possible for any politics, left or right, to devolve into mere consumption choices. But that is not the problem currently on display among gamers. Indeed, the danger arises from their choice *not* to just passively consume, and to lash out in defense of what they believe "true" gamer culture should be.

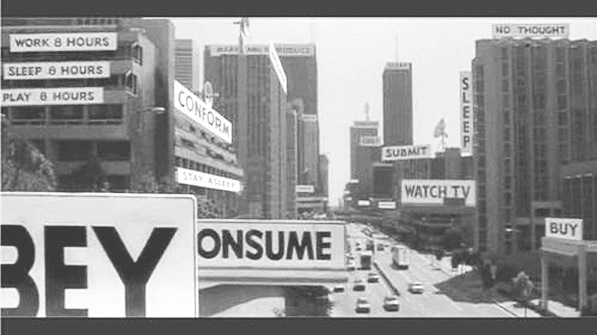

The attacks on people like Anita Sarkeesian should be understood as collective political acts, and the reactionaries who carry them out should be understood as ideological representatives of a specific political tendency among those who create and play games, rather than waved off with moralizing Adbusters-ish rhetoric as a bunch of consumer dupes. What threatens these gamers is the notion that gaming does not exist only to reassure their misogynist preconceptions, and that they may have those premises challenged. For not only is the culture of games broadening, but even the big-budget commercial segment that most caters to the backward fantasies of these young men is contracting relative to other parts of the industry, like indie, mobile, and web games.

As [Leigh Alexander](http://www.gamasutra.com/view/news/224400/Gamers_dont_have_to_be_your_audience_Gamers_are_over.php) points out in her more sophisticated deconstruction of the "gamer" identity, "It's hard for them to hear they don't own anything, anymore, that they aren't the world's most special-est consumer demographic, that they have to share." Change the words "consumer demographic" to "beneficiaries of the welfare state," and you could be talking about Tea Partiers defending their Medicare while denouncing welfare queens.

So this is not just a story about gamers. And within the boundaries of the games world, it is also not merely a story about a "toxic culture" among game fans, but rather about an industry that is structurally and systematically reactionary, and cultivates the same values among a segment of its consumers. It's not just 4chan mobs terrorizing writers and game designers, it's a games business that [pushes out](http://shawnelliott.blogspot.com/2013/05/leigh-alexander-and-i-agreed-to-move.html) workers who don't fit its political assumptions and demographic stereotypes, by way of the same sexist practices that [pervade](http://www.katelosse.tv/latest/2014/4/13/the-speculum-of-the-other-brogrammer) the tech industry generally.

Famous game designers and studio owners won't openly endorse the threats and terror of anonymous trolls, but those trolls are the shock troops that help keep the existing elite in power. The respectable men in suits will continue to hire the same boys' club while making excuses for why women just don't fit in as programmers or game designers or journalists. But the fascistic street-fighting tactics of the troll brigade work in the service of keeping everything in the industry the way it is.

Not only is it a useful tool for shutting down dissenting voices, the existence of these angry-nerd movements among fans and consumers does what fascistic movements always do: divide the working class by getting some of them to identify with the boss, which in this case serves to shore up the [hyper-exploitative](https://www.jacobinmag.com/2013/11/video-game-industry/) industry that Ian Williams has elsewhere described. The existence of a vociferously hostile vigilante squad shutting down dissenting speech makes it easier for studio heads to hire nothing but the same white men and then work them to death, for forum administrators to claim free speech and shrug at the hatred spewed on their pages, and for the industry to claim that they're only satisfying "the audience" when they reproduce the same narrow and bigoted tropes year after year. Meanwhile the "good" geeks get distracted from the main event as they tussle with the trolls, like [SHARPs](https://en.wikipedia.org/wiki/Skinheads_Against_Racial_Prejudice) and Nazi skinheads brawling at a basement show.

Which isn't to say that death threats are a great look for the suits at the top of the game industry hierarchy. The trolls may sometimes get out of control, just as the Republican establishment sometimes loses control of the Tea Party, or the industrial capitalists sometimes lose control of the Nazi brownshirts. But that doesn't mean they aren't part of one dialectically inter-related political project. [The Cossacks work for the Czar](http://crookedtimber.org/2009/01/27/the-cossacks-well-they-work-for-the-czar/). The street fighters are there to police the boundaries of discourse, to forcibly drive out anyone who challenges the existing hierarchy---women, people of color, LGBT people, even the odd white man deemed to be [too sympathetic](http://www.dailydot.com/geek/4chan-hacks-phil-fish-over-his-defense-of-zoe-quinn/) to the women and the commies.

Gaming doesn't have a problem; capitalism has a problem. Rather than seeing them simply as immoral assholes or deluded consumerists, we should take gaming's advanced wing of hateful trolls seriously as representatives of the reactionary shock troops that will have to be defeated in order to build a more egalitarian society in the games industry or anywhere else.