Breaking the Norm

November 2nd, 2018 | Published in Everyday life, Politics, Socialism, xkcd.com/386

I was pleasantly surprised with the response to my last post (thanks Red Wedge and Commune and the Art and Labor podcast). But there was one specific misinterpretation that I want to try to clarify. It arises specifically among the "normie" socialists I was targeting in my previous intervention, and it pertains to just what it means for the left to be a "subculture".

I saw multiple people state that in calling for a left that was openly weird, I was calling for a left that set itself apart as a "subculture" that self-consciously stands against the values and norms of the "mainstream" working class culture. And that is, indeed, what the apostles of the normcore left often accuse people like me of doing. But it is not at all what I was trying to do. I wasn't trying to oppose the normal, I was trying to abolish it. I'll try to explain that better in what follows.

It's telling that a certain portion of my readership---and it seems to be disproportionately other white men---sees me talking about trans people, or black people, or queer people, and immediately begins thinking in terms of "subculture". I think this arises from a genuine blind spot and not, in most cases, a conscious bias. The reflex---and it is one that does not only occur in white men, to be sure---is to implicitly associate "mainstream" with whiteness, maleness, cisness, heterosexuality, and the traditional family.

It was this bias that I was critiquing, and that Kate Griffiths critiques in the "what is normal" passage I quoted in my last post. Moreover, both of us were making the point that the "normal" is really a set of expectations that are imposed on the working class by a patriarchal capitalist system. And the real working class, far from adhering strictly to those expectations, is constantly in a state of either failing to live up to them, or not even wanting to try.

So the point is not to set the left up as its own separate subculture. Rather, the point is to embrace the full diversity and weirdness of working class culture as it already exists, and to accept the inherent weirdness of then adding to that a politics that demands the overthrow of the entire capitalist mode of production. All the variegated parts of the working class have to come together to form the only subculture that matters: a class implacably opposed to capital, rejecting the powers of accumulated wealth. Composing that class will not happen by subsuming all of our various subjectivities under some blank normality. A lot of people intuitively get that; how else to explain socialists' infatuation with the juggalos?

And in any case, normie is itself a subculture. In a Facebook thread discussing my post, Jesse Kudler notes that in many cases:

Young white educated people actually universalize from their rather particular circumstances and orientation. So being a queer communist or whatever becomes "weird" or "a subculture," but being a white 20-something with an advanced degree who likes Chapo and spends a lot of time shitposting on Twitter is "normal" and "universal" when in fact it's clearly actually its own hyper-specific sub-culture.

As Kudler said in a later post, learning Robert's Rules of Order is itself very weird subcultural behavior. But people don't see it this way, often because they come from a demographic background that has been socialized to think of whatever it is they do as "normal".

One consequence of this mental habit is that it inhibits the ability to distinguish between not centering some particular part of the working class and actually dismissing or attacking that class fragment.

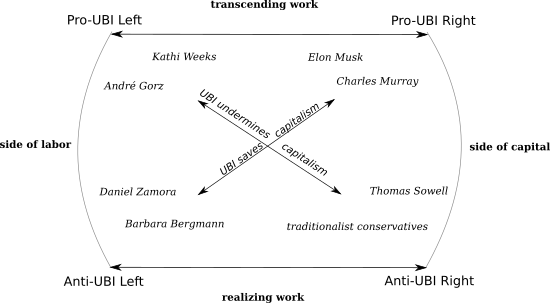

This came up in the debate I referenced earlier, about Asad Haider's Mistaken Identity. In response to a poorly argued review at Jacobin, Samuel Schwartz offered a clear explanation of what was wrong with the entire premise of an argument that says certain demands, like Medicare for All, are superior by virtue of being "universal".

The problem becomes immediately evident when Medicare for All is opposed to some supposedly "particularist" and therefore divisive demand such as abolishing ICE or abolishing prisons. What is it, exactly, that makes these demands particular? They do not demand abolishing ICE or prisons only for certain people, they simply demand that nobody should be deported or incarcerated.

The charge of particularism can only be made to stick if it is taken to describe, not who is affected by a policy, but who is most perceived to be affected by it. And this brings us to another rhetorical move that is routine in normitarian universalist circles: the insistence that some "universal" demand like Medicare for All is "really" the most anti-racist or feminist demand, since in practice women and people of color will benefit the most from it.

There are two related problems with this. First, as an organizing strategy it amounts to a belief that white and male workers are fundamentally racist and patriarchal, and can only be won to socialism if they can be tricked into believing that they are not fighting for the interests of women or people of color. This is, I think, contrary to reality and historical experience, but worse than that it is self-reinforcing: a politics that deliberately avoids talking about race or gender will never be able to challenge racism and sexism and homophobia in its own ranks, and the movement and the class that forms around that politics will, in fact, be more reactionary than it might have been otherwise.

Moreover, after the interests of marginalized fractions are pushed aside, the once-"universal" demands quickly lose their universality. One can easily imagine a compromise version of Medicare for All that leaves out reproductive rights, or the trans health services that, as Fainan Lakha notes, are critical to a left-wing health care politics. And the very fact that health care delivery has those specificities, for particular groups, shows that all "universal" policies are particular in their implementation.

All of this is why all of our "subcultures" of the working class are important---and yes, that includes the subculture of straight white couples who want to form nuclear families and raise kids in the suburbs. What's objectionable about normie socialism isn't that some people desire that lifestyle. It's that they insist on making it the center of attention at the expense of everyone else. That's what many found odd about Jacobin's recent embrace of socialist pro-natalism arguments. On a policy level I find much to agree with in the linked articles. And I don't have anything against people who want to be in heteronormative couples and have children. I just have a hard time believing that such people represent a specially persecuted group on the left. More likely, I think some people get uncomfortable when their particular needs and desires aren't treated as if they are more normal or healthy or important than everyone else's.

A related problem came up in the debate over the Haider review that I mentioned above. What critics had to point out, whether explicitly or not, was that the entire premise of the call for universalism was patriarchal and white supremacist. That is, it measured universality not in terms of who a demand applies to, but in terms of how much the implied "normal worker" (white, male, cis, straight) could feel it applied to them. When this is pointed out it immediately leads to defensive reactions, because, unsurprisingly, a political tendency that assiduously avoids talking about race and gender is not very good at structural understandings of race and gender. Instead, people tend to fall back on the kind of liberal ascriptive politics they paint onto their opponents: a claim that a particular argument or strategy has racist premises gets turned into an accusation that the people making that argument are, themselves, irredeemably racist as people.

But the point here isn't to draw some kind of line in the sand dividing the woke from the backwards. Rather, it is to seriously investigate what it takes to actively take the working class as it exists in itself, in many different modes and combinations of everyday life, and combine and compose that into a fighting class for itself. That won't be done by ignoring the way we are divided into many "subcultures" that live class in different ways and uniting under a blank banner of the normal. It will be done by all of us learning to admit we aren't as normal as we might want to believe.